TL;DR

- Synthetic users are simulations, not people.

- AI can mimic average behavior, but it misses human context.

- Real insights come from messiness, emotion, and contradiction.

- Use AI to assist research, not replace it.

- Speed and scale are tempting, but confidence matters more.

- If you want to truly connect with your customers, you need to talk to them.

Synthetic Users Are Not Users

She told us she hadn’t slept properly in years, was barely coping financially, and had no one to support her. We stopped the session. And no, we didn’t walk away with tactical insights or clean data.

But we did walk away with something more important: a reminder of the chaos and emotional weight real people bring with them when they use products. That’s the part you miss when you swap real users for synthetic ones.

The Rise of Synthetic Research

The idea of “synthetic feedback” — using AI agents to replace real user testing — has gained traction recently. Advocates like Davis Treybig suggest we can simulate interviews, surveys, or even A/B tests without engaging real humans.

Sounds efficient, right? But I’m not convinced. Over the last 14 years in UX and experimentation, I’ve seen firsthand just how messy, irrational, and emotional human behavior is. And AI doesn’t do messy.

Why Synthetic Users Sound Smarter Than They Are

Synthetic users are built by prompting large language models (LLMs) like ChatGPT to generate “representative” user thoughts, feedback, or personas. These models are trained on vast amounts of human-generated content, so in theory, shouldn’t they reflect human behavior? Here’s the catch:

AI predicts what’s most likely. Humans do what’s least expected.

AI doesn’t get tired. It doesn’t panic at 3 a.m. trying to order groceries while calming a crying baby. It doesn’t drink too much and struggle through a takeaway order (like one real participant did during another study I ran). These are not edge cases. They are everyday humanity.


As behavioral economist Dan Ariely puts it:

“We are fallible, easily confused, not that smart, and often irrational. We are more like Homer Simpson than Superman.”

— from Predictably Irrational

We improvise. We contradict ourselves. We click the wrong thing and get frustrated. These behaviors matter, and they’re often invisible to AI.

Real Example: When Synthetic Insight Fell Short

We once ran research on a diabetes management product that keeps insulin cool during travel. Before we spoke to a single user, we prompted an AI to simulate “user feedback” based on what it could draw from the public internet. Here’s what the AI flagged:

- Price sensitivity

- Vague marketing terms

- Contradictory return policy

Sounds reasonable. And sure, a couple of those aligned with what we eventually heard from real users. But here’s what the AI missed:

- DIY alternatives: Real users had already hacked the problem with ice packs or FRIO sleeves, so they didn’t see the need for the product.

- Education gap: Many didn’t realize that improper insulin storage affects potency, so they didn’t see the value in the product.

These insights weren’t predictable. They were contextual. They surfaced only through conversation, confusion, and follow-up questions, not through prediction models trained on past text.

Predictive Heatmaps vs Reality: A Visual Mismatch

Let’s talk about another popular synthetic tool: AI-generated heatmaps. These tools attempt to show where users will click or focus on a page, all without involving a single user.

Here’s what happened in one client project. We compared two heatmaps for the same homepage:

- The AI-generated heatmap, built using Microsoft Clarity’s predictive tool, suggested heavy interaction with the shopping cart, the CTA banner, the search bar, the “bestseller” CTAs, and even some brand logos.

- The real user data, also from Microsoft Clarity, painted a very different picture. It showed almost no engagement with the core content, the logos, or the “bestseller” buttons. Instead, most clicks clustered around the site search and little else.

Same page. Same time period. Totally different story.

The AI told us what should happen based on the layout and visual hierarchy. Real users showed us what actually happened, and it was shaped by intent and context the AI couldn’t see.

But Isn’t AI Faster, Cheaper, and Scalable?

Yes. Absolutely. Synthetic feedback has some very real benefits:

- Speed: You can simulate insights in minutes instead of waiting weeks to recruit.

- Cost: No participant incentives, no labs, no moderators.

- Scale: Simulate hundreds or thousands of responses without extra effort.

But those gains come with a trade-off, and it’s not just about quality. It’s about confidence.

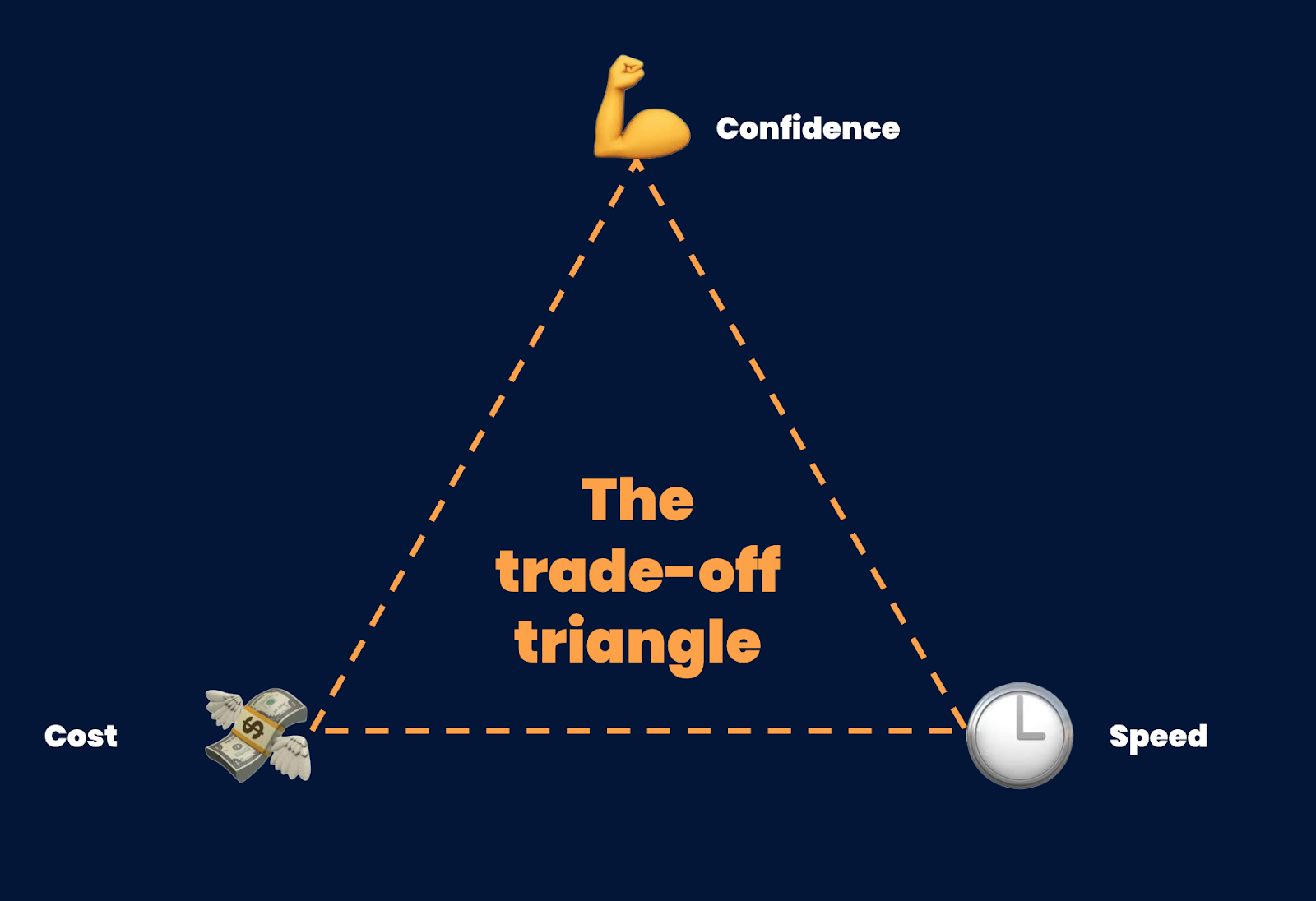

At Speero, we use the trade-off triangle when planning research methodologies:

- Time

- Cost

- Confidence

Synthetic research trades confidence for convenience. That might be fine if the stakes are low. But if you're making key product decisions that affect growth, retention, or trust, you can’t afford to bet on guesses dressed as insights.

“Sending a robot to the Bahamas may be cheaper and faster than going yourself. But what’s the point of doing so, exactly?”

(source)

Use AI as a Tool — Not a Proxy

I’m not anti-AI. I use it every day for:

- Summarizing interviews

- Clustering open-text responses

- Streamlining analysis workflows

These are fantastic use cases. But they’re support tools, not replacement tools.

Synthetic users don’t ask unexpected questions. They don’t hesitate. They don’t surprise you.

They’re just mirrors, reflecting what we’ve already told them about ourselves.

The Real Work Happens in the Mess

When people cry in sessions, get distracted by toddlers, or show up a bit drunk, those moments remind us why real user research matters.

Because products don’t live in ideal conditions. They live in the chaos of real life. And understanding that chaos is where the best product insights come from.

So sure, let AI simulate. Let it assist. But if we want to build products people truly connect with, we still have to talk to them.
