It seems like we have less and less time as time goes on. There are kids, there's pretending you train five times a week, and the new mantra at the watercooler seems to be ‘do more with less’. Then comes the realization that experimentation is more than testing:

- Pull the data, analyze it, find insights, hypothesize

- Research, create roadmaps, plan, strategize

- Communicate insights and test results, get buy-in, build culture

- Develop processes for research, testing, analyzing, and presenting

Then, suddenly, you’re hit with a ‘quick’ question from a client on Slack. Now you’re spending an hour tracking down that test, its learnings, and its data.

There’s a big problem in the world of experimentation: getting quick, reliable, and deep answers from your mountain of testing data. It takes time, it takes collaboration, and it pulls other people away from their work.

But what if you could just ask your entire experimentation history a question in plain language and get a smart, tailored answer in seconds?

This is how an AI-powered Experimentation Operating System (XOS), built right into a platform like Airtable, can change your game. It transforms your data hub from a passive storage unit into an active, conversational partner—one that helps you make faster, better-informed decisions without hunting through spreadsheets or decks.

Instead of spending hours searching for test results or rebuilding learnings, you can instantly surface insights, generate new hypotheses, and identify high-impact opportunities. The result: faster iteration cycles, smarter prioritization, and a culture where experimentation matters.

XOS Helps You Grow and Mature (Your Program)

Building XOS on platforms like Airtable is a powerful driver of growth and program maturation. One of Speero’s clients, Freshworks, faced a similar situation. They wanted to boost their already mature program by streamlining processes, improving efficiency, and increasing testing and ideation speed.

We leveraged our XOS framework to develop Airtable interfaces that simplified workflows, enabled quick idea capture, streamlined reviews, and advanced approved tests efficiently while fostering collaboration.

The result? Freshworks maintained high test velocity, improved tracking, and enhanced efficiency. Greater visibility boosted stakeholder engagement, delivering faster insights and ensuring the program scaled effectively.

How to Talk to Your Test Data

The most immediate and obvious benefit of connecting AI to your experimentation data in Airtable is the ability to have a natural language conversation with your data.

Think about it:

- Someone asks, "Hey, what was that test we ran last quarter that was looking at the product page header copy?"

- Instead of digging through your knowledge base, filtering spreadsheets, and cross-referencing Slack messages, you just copy the question and paste it into the AI chat box.

- In most cases, the response is relevant and accurate enough to guide next steps.

The assistant helps you query your base, generate structured plans, analyze results, and scaffold interfaces & automations directly inside Airtable. Start with past wins or a one-liner idea, then expand into KPI-aligned test concepts or structured briefs with hypothesis, metrics, variants, and QA notes.

Think beyond simple keyword searches. Integrating AI into XOS tools like Airtable lets you ask more open-ended questions and get specific answers. You can use the chat box to "brain dump" an idea or a complex question, and the AI feeds back well-tailored, detailed information.

You can even cluster backlog items into themes like trust, clarity, or information architecture and identify high-traffic pages with low test density to surface the best opportunities.
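To make "high-traffic pages with low test density" concrete, here is a minimal Python sketch of the kind of ranking the AI is doing for you. Everything in it is an assumption for illustration: the `PageStats` fields, the 10,000-session threshold, and the sessions-per-test score are hypothetical and not part of Airtable or the XOS framework.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    monthly_sessions: int  # hypothetical traffic figure
    tests_run: int         # how many experiments have touched this page


def rank_untested_opportunities(pages, min_sessions=10_000):
    """Return high-traffic pages, least-tested relative to traffic first."""
    candidates = [p for p in pages if p.monthly_sessions >= min_sessions]
    # Sessions per test; the +1 avoids dividing by zero for never-tested pages.
    return sorted(
        candidates,
        key=lambda p: p.monthly_sessions / (p.tests_run + 1),
        reverse=True,
    )


pages = [
    PageStats("/pricing", 80_000, 1),
    PageStats("/product", 120_000, 9),
    PageStats("/blog/post", 4_000, 0),
    PageStats("/signup", 50_000, 0),
]

for page in rank_untested_opportunities(pages):
    print(page.url)
```

The low-traffic blog post drops out, and the never-tested signup page rises to the top even though the product page gets more traffic.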

This capability cuts out a huge chunk of time that used to be spent thrashing around, not even sure what you were looking for until you saw it.

Standardization Makes AI Smarter

Right now, AI can analyze your experimentation data within Airtable’s permissions and context. But the next step—the one that makes the AI truly powerful—is standardization.

When you move to a more standardized XOS with a consistent schema, the AI's answers get noticeably more reliable. Imagine an XOS where:

- Field names are the same across all projects (e.g., "Hypothesis," "Primary Metric," "Test Status").

- Dropdown options are standardized (e.g., "Win," "Loss," "Inconclusive," "Iterate").

You can feed this standardized schema right into the AI's instructions (its prompt). You can essentially tell the AI what each field is supposed to do. This means the AI isn't just pattern-matching text; it understands the purpose of the data, which leads to even better answers and insights.
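As a rough illustration of feeding a schema into the AI's instructions, the sketch below turns a field dictionary into a block of prompt text. It is plain Python, not an Airtable API. The field purposes are illustrative wording, and the "Result" field that holds the Win/Loss/Inconclusive/Iterate options is a hypothetical name.

```python
# Hypothetical schema: field names and options echo the examples above,
# but the purposes and the "Result" field name are assumptions.
SCHEMA = {
    "Hypothesis": {"purpose": "The change being tested and the expected effect."},
    "Primary Metric": {"purpose": "The single metric that decides the test."},
    "Test Status": {"purpose": "Where the test is in its lifecycle."},
    "Result": {
        "purpose": "The outcome of the test.",
        "options": ["Win", "Loss", "Inconclusive", "Iterate"],
    },
}


def build_schema_prompt(schema):
    """Describe every field so the model knows what each one is for."""
    lines = [
        "You are answering questions about an experimentation base.",
        "Fields:",
    ]
    for name, spec in schema.items():
        line = f"- {name}: {spec['purpose']}"
        if spec.get("options"):
            line += " Allowed values: " + ", ".join(spec["options"]) + "."
        lines.append(line)
    return "\n".join(lines)


prompt = build_schema_prompt(SCHEMA)
print(prompt)
```

Because the schema lives in one place, the same text can be reused across projects, which is the point of standardizing field names and dropdown options in the first place.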

This aligns perfectly with the need for a strong Test Phase Gate Blueprint, which defines the phases, stages, and required artifacts (like the test document) for every experiment. The more structured and consistent your inputs, the smarter your AI will be at managing the entire experimentation flywheel.

Other Powerful AI Use Cases

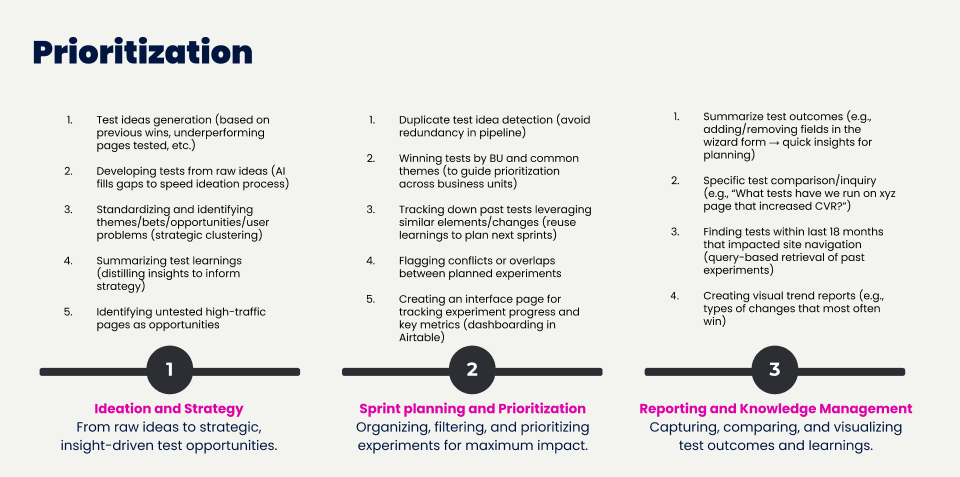

The conversation is just the starting point. You can do a lot more with AI in Airtable:

- Prioritize and avoid duplicates. Airtable AI can reduce redundant testing as it identifies and flags duplicate or overlapping tests early.

- Share learnings. AI can roll up wins or summarize wins/losses by business unit to improve insight sharing between your teams.

- Highlight the next bets. This way, you can leverage past results to hypothesize and direct bigger swings.

- Generate test ideas based on prior wins, underperforming pages, and similar signals.

- Develop tests from raw ideas. AI fills gaps to speed the ideation process.

- Standardize and identify themes, bets, opportunities, or user problems (strategic clustering)

- Summarize test learnings (distilling insights to inform strategy)

- Identify untested high-traffic pages as opportunities

- Reuse learnings to plan next sprints. Track down past tests with similar elements or changes.

Field AI Agent That Builds Directly in Airtable

This is where the magic really starts: using the AI tool as a "Field Agent" that can literally build new fields in your Airtable base.

You can also have it set up complex, background-running automation. For instance, you could tell it: "Every time the 'Test Status' field is updated to 'Implemented,' look at the 'Solution Spectrum Tag' field and update a separate tracking table with the number of 'Disruptive' tests that have been shipped."

Once these agents are set up, they run in the background, making your experimentation process more robust without needing constant manual oversight.
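The logic behind an automation like the one described above is simple once you write it out. This is a minimal sketch in plain Python over a list of records, not Airtable's automation or scripting API; the `tracking` dictionary and the "Disruptive tests shipped" key are hypothetical stand-ins for the separate tracking table.

```python
def count_shipped_disruptive(records):
    """Count tests that are both implemented and tagged as disruptive."""
    return sum(
        1
        for r in records
        if r.get("Test Status") == "Implemented"
        and r.get("Solution Spectrum Tag") == "Disruptive"
    )


def on_status_change(records, tracking):
    """Recompute the tally whenever a 'Test Status' field changes."""
    tracking["Disruptive tests shipped"] = count_shipped_disruptive(records)
    return tracking


records = [
    {"Test Status": "Implemented", "Solution Spectrum Tag": "Disruptive"},
    {"Test Status": "Implemented", "Solution Spectrum Tag": "Iterative"},
    {"Test Status": "Running", "Solution Spectrum Tag": "Disruptive"},
    {"Test Status": "Implemented", "Solution Spectrum Tag": "Disruptive"},
]

tracking = on_status_change(records, {})
print(tracking)
```

Recounting from scratch on every change is slower than incrementing a counter, but it stays correct when a status is later changed back, which matters for a background process nobody is watching.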

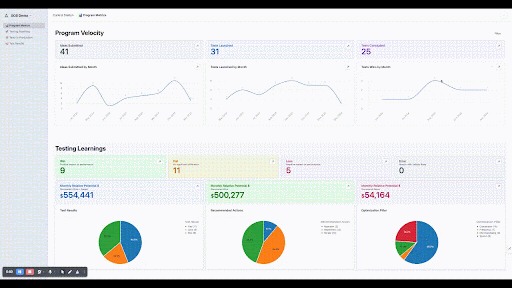

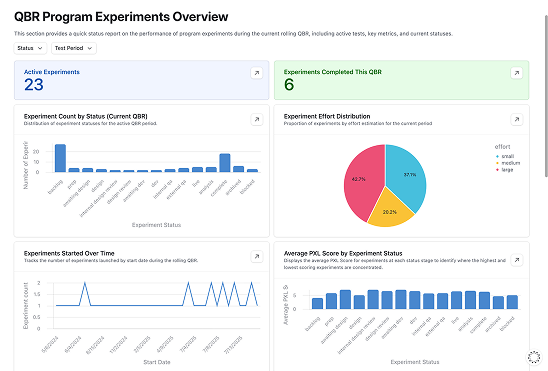

Instant Dashboards and Interfaces

With real, detailed experimentation data, you can ask the AI to "produce a dashboard" with a simple, short prompt—sometimes as little as 50 words.

The result is a complex, data-rich dashboard or "interface page" that tells a story. Instead of manually dragging and dropping charts, the AI can instantly visualize your data, which is an incredible boost to communicating results to leadership and other teams.

This way, you can monitor experiment status from ideation through launch with dashboards showing owners, guardrails, and linked artifacts.

This capability makes it easy to track and report on metrics from the Program Metrics Blueprint, allowing you to monitor the health of your program by visualizing everything from test velocity to the number of ideas in the backlog.

We saw the value of this kind of visibility with one of our clients. Tipalti sought to address operational inefficiencies and create a more streamlined, scalable framework to support program growth and evolving needs.

Speero introduced interfaces for design approvals, QA tracking, backlog prioritization, research reviews, and improved data organization, streamlining workflows and enhancing program efficiency.

These solutions created efficiencies across workflows, improving program visibility, enabling faster testing and research processes, and allowing for greater flexibility and scalability within the program’s operations.

Programmatic Structure for Experimentation Success

The reason this AI-powered XOS works so well is that it’s layered on top of a solid, defined structure. This structure is what Speero's blueprints are all about—making your experimentation process clear, repeatable, and measurable.

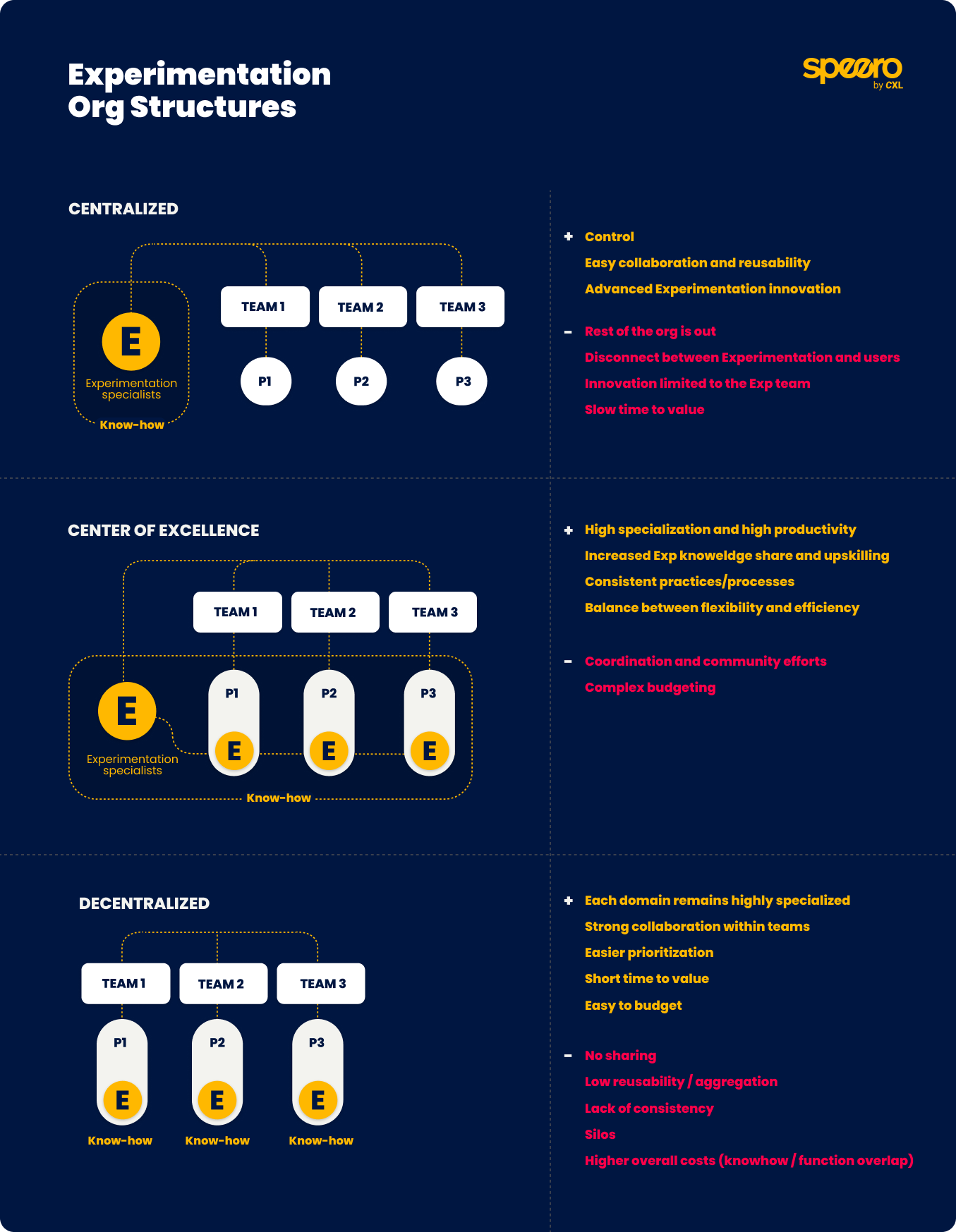

Organizational Clarity with RASCI and Org Charts

As your program grows, defining roles and responsibilities can be a headache. But with a standardized system, you can implement the Experimentation Program RASCI Matrix Blueprint to clearly define who is Responsible, Accountable, Supporting, Consulted, and Informed for every activity. This cuts through confusion and increases efficiency.

Similarly, the Org Charts Blueprint gives you a reference for structuring your experimentation capacity—whether you choose a centralized, decentralized, or Center of Excellence (CoE) model.

Measuring Impact: Results vs. Actions and Solution Spectrum

One of the big mistakes new teams make is equating a test's "result" (Win/Loss/Flat) with the "action" taken (Implement/Iterate/Abandon). The goal isn't just to "win," it's to make good decisions that effect change.

The Results Vs Actions Blueprint forces you to separate and tag these two concepts, which is crucial for calculating metrics like win rate vs. action rate and measuring your program's agility.
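Here is a small Python sketch of why the separation matters. The definitions are assumptions for illustration, not the blueprint's official formulas: win rate is taken as the share of tests with a "Win" result, and action rate as the share of tests that led to "Implement" or "Iterate". The sample records are made up.

```python
def rate(tests, field, values):
    """Share of tests whose `field` is one of `values` (0.0 for no tests)."""
    if not tests:
        return 0.0
    return sum(1 for t in tests if t[field] in values) / len(tests)


# Hypothetical test log with result and action tagged separately.
tests = [
    {"result": "Win", "action": "Implement"},
    {"result": "Flat", "action": "Implement"},
    {"result": "Loss", "action": "Iterate"},
    {"result": "Loss", "action": "Abandon"},
]

win_rate = rate(tests, "result", {"Win"})
action_rate = rate(tests, "action", {"Implement", "Iterate"})

print(f"Win rate: {win_rate:.0%}")        # Win rate: 25%
print(f"Action rate: {action_rate:.0%}")  # Action rate: 75%
```

In this made-up log only one test in four "won," yet three in four led to a decision the team acted on. If result and action shared one field, that difference would be invisible.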

And to ensure you're not stuck just making small tweaks, the Solution Spectrum Blueprint lets you tag tests as Iterative, Substantial, or Disruptive. This tagging is essential for measuring your 'test portfolio balance' and having the right mix of big bets and small optimizations, especially when business conditions get tough.
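Once tests carry a Solution Spectrum tag, portfolio balance is a simple share calculation. The sketch below is a minimal Python version under stated assumptions: the tag counts are invented, and what counts as the "right mix" is left to your program.

```python
from collections import Counter

SPECTRUM = ("Iterative", "Substantial", "Disruptive")


def portfolio_balance(tags):
    """Return the share of tests for each Solution Spectrum tag."""
    counts = Counter(tags)
    total = sum(counts[tag] for tag in SPECTRUM)
    return {tag: (counts[tag] / total if total else 0.0) for tag in SPECTRUM}


# Hypothetical tags pulled from ten shipped tests.
tags = ["Iterative"] * 6 + ["Substantial"] * 3 + ["Disruptive"] * 1

for tag, share in portfolio_balance(tags).items():
    print(f"{tag}: {share:.0%}")
```

A portfolio that is 60% iterative and 10% disruptive might be fine in a stable quarter and too timid in a tough one. The tagging is what lets you see it and have that conversation.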

Your Data is Safe

This blog post is a product of building AI in Airtable for one of our clients. One of their main questions was “How are you using our data?” Is the data secure? Can we trust LLMs with proprietary information?

Airtable encrypts data in transit and at rest, maintains independent security certifications, and ensures the data you store there isn’t retained by its AI platforms or used for model training.

Most enterprises already trust large language models in some form. The key is shifting perception: if your proprietary data already lives securely in the cloud, connecting it to an LLM doesn’t introduce new risk—it simply unlocks more value from what you already have.

Conclusion

The future of experimentation isn't just about running more tests; it’s about making your process smarter, faster, and more integrated. By building an AI-powered XOS in a platform like Airtable, you move your program from a passive repository of results to an active, conversational system that drives high-impact decisions.
